The Introduction to this series of blogs, HERE, sets out the background and goals.

There are many different senses in which people discuss ‘standards’. Sometimes they mean an overall judgement on the performance of the system as judged by an international test like PISA. Sometimes they mean judgements based on performance in official exams such as KS2 SATs (at 11) or GCSEs. Sometimes they mean the number of schools above or below a DfE ‘floor target’. Sometimes they mean the number of schools and/or pupils in Ofsted-defined categories. Sometimes people talk about ‘the quality of teachers’. Sometimes they mean ‘the standards required of pupils when they take certain exams’. Today, the media is asking ‘have Academies raised standards?’ because of the Select Committee Report (which, after a brief flick through, seems to have ignored most of the most interesting academic studies done on a randomised/pseudo-randomised basis).

This blog in the series is concerned mainly with two questions: what has happened to the standards required of pupils when they take GCSEs and A Levels as a result of changes since the mid-1980s, and how do universities and learned societies judge the preparation of pupils for further studies? Have the exams got easier? Do universities and learned societies think pupils are well-prepared for further studies?

I will give a very short potted history of the introduction of GCSEs and the National Curriculum before examining the evidence of their effects. If you are not interested in the history, please skip to Section B on the evidence. If you just want to see my Conclusions, scroll to the end for a short section.

I stress that my goal is not to argue for a return to the pre-1988 system of O Levels and A Levels. While it had some advantages over the existing system, it also had profound problems. I think that an unknown fraction of the cohort could experience far larger improvements in learning than we see now if they were introduced to different materials in different ways, rather than either contemporary exams or their predecessors, but I will come to this argument, and why I have this belief, in a later blog.

I have used the word ‘Department’ to represent the DES of the 1980s, the DfE of post-2010, and its different manifestations in between.

This is just a rough first stab at collecting things I’ve shoved in boxes, emails etc over the past few years. Please leave corrections and additions in Comments.

A. A very potted history

Joseph introduces GCSEs – ‘a right old mess’

The debate over the whole of education policy, and particularly the curriculum and exams, changed a lot after Callaghan’s Ruskin speech in 1976 and the Department’s Yellow Book. Before then, the main argument was simply about providing school places and the furore over selection. After 1976 the emphasis shifted to ‘standards’ and there was growing momentum behind a National Curriculum (NC) of some sort and reforms to the exam system.

Between 1979 and 1985, the Department chivvied LAs on the curriculum but had little power and nothing significant changed. Joseph was too much of a free marketeer to support a NC so its proponents could not make progress.

Joseph was persuaded to replace O Levels with GCSEs. He thought that the outcome would be higher standards for all but came to believe that he had been hoodwinked by the bureaucratic process involving the Secondary Examinations Council (SEC). He later complained:

‘I should have fought against flabbiness in general more than I did… I thought I did, but how do you reach into such a producer-oriented world? … “Stretching” was my favourite word; I judged that if you leant on that much else would follow. That’s what my officials encouraged me to imagine I was achieving… I said I’d only agree to unify the two examinations provided we established differentiation [which he defined as ‘you’re stretching the academic and you’re stretching the non-academic in appropriate ways’], and now I find that unconsciously I have allowed teacher assessment, to a greater extent than I assumed. My fault … my fault… it’s the job of ministers to see deeply… and therefore it’s flabby… You don’t find me defending either myself or the Conservative Party, but I reckon that we’ve all together made a right old mess of it. And it’s hurt most those who are most vulnerable.’ (Interview with Ball.)

I have not come across any other ministers or officials from this period so open about their errors.

The O Level survived under a different name as an international exam provided by Cambridge Assessment. It is still used abroad including in Singapore which regularly comes in the top three in all international tests. Cambridge Assessment also offers an ‘international GCSE’ that is, they say, tougher than the ‘old’ GCSE (i.e. the one in use now before it changes in 2015) but not as tough as the O Level. This international GCSE was used in some private schools pre-2010 along with ‘international GCSEs’ from other exam boards. From 2010, state schools could use iGCSEs. In 2014, the DfE announced that it would stop this again. I blogged on this decision HERE.

Entangled interests – Baker and the National Curriculum

In 1986, Thatcher replaced Joseph with Baker hoping, she admitted, that he would make up ‘in presentational flair whatever he lacked in attention to detail’. He did not. Nigel Lawson wrote of Baker that ‘not even his greatest friends would describe him as a profound thinker or a man with mastery of detail’. Baker’s own PPS said that at the morning meeting ‘the main issue was media handling’. Jenny Bacon, the official responsible for The National Curriculum 5-16 (1987), said that Baker liked memos ‘in “ball points” … some snappy things with headings. It wasn’t glorious continuous prose…[Ulrich, a powerful DES official] was appalled but Baker said “That’s just the kind of brief I want”.’

Between 1976 and 1986, concern had grown in Whitehall about the large number of awful schools and widespread bad teaching. Various intellectual arguments, ideology, political interests (personal and party), and bureaucratic interests aligned to create a National Curriculum. Thatcherites thought it would undermine what they thought of as the ‘loony left’, then much in the news. Baker thought it would bring him glory. The Department and HMI rightly thought it would increase their power. After foolishly announcing CTCs at Party Conference, thus poisoning their brand with politics from the start, Baker announced he would create a NC and a testing system at 7, 11, and 14.

The different centres of power disagreed on what form the NC would take. HMI lobbied against subjects and wanted a NC based on ‘areas of expertise’, not traditional subjects. Thatcher wanted a very limited core curriculum based on English, maths, and science. The Department wanted a NC that stretched across the whole curriculum. Baker agreed with the Department and dismissed Thatcher’s limited option as ‘Gradgrind’.

In order to con Thatcher into agreeing his scheme, Baker worked with officials to invent a fake distinction between ‘core’ and ‘foundation’ subjects. As Baker’s Permanent Secretary Hancock said, ‘We devised the notion of the core and the foundation subjects but if you examine the Act you will see that there is no difference between the two. This was a totally cynical and deliberate manoeuvre on Kenneth Baker’s part.’

The 1988 Act established two quangos to be what Baker called ‘the twin guardians of the curriculum’ – The National Curriculum Council (NCC), focused on the NC, and The Schools Examinations and Assessment Council (SEAC), focused on tests. Once the Act was passed, Baker’s junior minister Rumbold said that ‘Ken went out to lunch.’ Like many ministers, he did not understand the importance of the policy detail and the intricate issues of implementation. He allowed officials to control appointments to the two vital committees and various curriculum working groups. Even Baker’s own spad later said that Baker was conned into appointing ‘the very ones responsible for the failures we have been trying to put right’. Baker forlornly later admitted that ‘I thought you could produce a curriculum without bloodshed. Then people marched over mathematics. Great armies were assembled’, and he ‘never envisaged it would be as complex as it turned out to be’. Bacon, the official responsible for the NC, said that Baker ‘wasn’t interested in the nitty gritty’. Nicholas Tate (who was at the NCC and later headed the QCA) said that Baker was ‘affable but remote. He didn’t trouble his mind with attainment targets. He was resting on his laurels.’ Hancock, his Permanent Secretary, said that ‘after 1987 he became increasingly arrogant and impatient’. In 1989, Baker was moved to Party Chairman leaving behind chaos for his successor.

According to his colleagues, Baker was obsessed with the media, he did not try to understand (and did not have the training to understand) the policy issues in detail, and he confused the showmanship necessary to get a bill passed with serious management – he described himself as ‘a doer’ but the ‘doing’ in his mind consisted of legislation and spin. He did not even understand that there were strong disputes among teachers, subject bodies, and educationalists about the content of the NC – never mind what to do about these disputes. (Having watched the UTC programme from the DfE, the same traits were much in evidence thirty years later.)

Baker’s legacy 1989 – 1997: Shambles

Baker’s memoirs do not mention the report of the Task Group on Assessment and Testing (TGAT), chaired by Professor Paul Black, which Baker commissioned in 1987 to report on how the NC could be assessed. The plan was very complicated, with ten levels of attainment having to be defined for each subject. Thatcher hated it and criticised Baker for accepting it. Meanwhile the Higginson Report had recommended replacing A Levels with some sort of IB-type system. Bacon said that ‘the political trade-off was Higginson got ditched … and we got TGAT. In retrospect it may have been the wrong trade off.’

Baker’s successor, MacGregor, could not get a grip of the complexity. He did not even hire a specialist policy adviser because, he said, ‘I didn’t feel I needed one.’ He blamed the chaos on Baker who, he said, ‘hadn’t spent enough time thinking about who was appointed to the bodies. He left it to officials and didn’t think through what he wanted the bodies to do. For the first year I was unable to replace anybody.’ The chairman of NCC described how they used ‘magic words to appease the right’ and get through what they wanted. The officials who controlled SEAC stopped the simplification that Thatcher wanted using the ‘legal advice’ card, claiming that the 1988 Act required testing of all attainment targets. (I had to deal with the same argument 25 years later.) MacGregor was trapped. He had an unworkable system and was under contradictory pressure from Thatcher to simplify everything and from Baker to maintain what he had promised.

Clarke bluffed and bullied his way through 18 months without solving the problems. His Permanent Secretary described the trick of getting Clarke to do what officials wanted: ‘The trick was to never box him into a corner… Show him where there was a door but never look at that door, and never let on you noticed when he walked through.’ Like MacGregor, Clarke blamed Baker for the shambles: ‘[Baker] had set up all these bloody specialist committees to guide the curriculum, he’d set up quango staff who as far as I could see had come out of the Inner London Education Authority the lot of them.’ Clarke solved none of the main problems with the tests, antagonised everybody, and replaced HMI with Ofsted.

After his surprise win, Major told the Tory Conference in 1992, ‘Yes it will mean another colossal row with the education establishment. I look forward to that.’ Patten soon imploded, the unions went for the jugular over the introduction of SATs, and by the end of 1993 Number Ten had backtracked on their bellicose spin and was in full retreat with a review by Dearing (published 1994). Suddenly, the legal advice that had supposedly prevented any simplification was rethought and officials told Dearing that the legal advice did allow simplification after all: ‘our advice is that the primary legislation allows a significant measure of flexibility’. (In my experience, one of the constants of Whitehall is that legal advice tends to shift according to what powerful officials want.) Dearing produced a classic Whitehall fudge that got everybody out of the immediate crisis but did not even try to deal with the fundamental problems, thus pushing the problems into the future.

The historian Robert Skidelsky, helping SEAC, told Patten ‘these tests will not run’ and he should change course but Patten shouted ‘That is defeatist talk.’ Skidelsky decided to work out a radically simpler model than the TGAT system with a small group in SEAC: ‘We pushed the model through committee and through the Council and sent it off to John Patten. We never received a reply. Six months after I resigned Emily Blatch approached me and said she had been looking for my paper on Assessment but no one seems to know where it is.’

Patten was finished. Gillian Shephard was put in to be friendly to the unions and quiet the chaos. Soon she and Major had also fallen out and the cycle of briefing and counter-briefing against Number Ten returned with permanent policy chaos. One of her senior officials, Clive Saville, concluded that ‘There was a great intellectual superficiality about Gillian Shephard and she was as intellectually dishonest as Shirley Williams. She was someone who wanted to be liked but wasn’t up to the job.’

A few thoughts on the process

The Government had introduced a new NC and test system and replaced O Levels with GCSEs. (They also introduced new vocational qualifications (NVQs) described by Professor Alan Smithers as a ‘disaster of epic proportions … utterly lightweight’.) The process was a disastrous bungle from start to finish.

Thatcher deserves considerable blame. She allowed Baker to go ahead with fundamental reforms without any agreed aims or a detailed roadmap. She knew, as did Lawson, that Baker could not cope with details yet appointed him on the basis of ‘presentational flair’ (media obsession is often confused with ‘presentational flair’).

The best book I have read by someone who has worked in Number Ten and seen why the Whitehall architecture is dysfunctional is John Hoskyns’ Just In Time. Extremely unusually for someone in a senior position in No10, Hoskyns both had an intellectual understanding of complex systems and was a successful manager. Inevitably, he was appalled at how the most important decisions were made and left Number Ten after failing to persuade Thatcher to tear up the civil service system. Since then, everybody in Number Ten has been struggling with the same issues. (If she had taken his advice history might have been extremely different – e.g. no ERM debacle.) His conclusion on Thatcher was:

‘The conclusion that I am coming to is that the way in which [Thatcher] herself operates, the way her fire is at present consumed, the lack of a methodical mode of working and the similar lack of orderly discussion and communication on key issues, means that our chance of implementing a carefully worked out strategy – both policy and communications – is very low indeed… Difficult problems are only solved – if they can be solved at all – by people who desperately want to solve them… I am convinced that the people and the organisation are wrong.’ (Emphasis added.)

Arguably the person who knowingly appoints someone like Baker is more to blame for the failings of Baker than Baker is himself. Major and the string of ministers that followed Baker were doomed. They were not unusually bad – they were representative examples of those at the apex of the political process. They did not know how to go about deciding aims, means, and operations. They were obsessed with media management and therefore continually botched the policy and implementation. They could not control their officials. They could not agree a plan and blamed each other. If they were the sort of people who could have got out of the mess, then they were the sort of people who would not have got into the mess in the first place.

Officials over-complicated everything and, like ministers, did not engage seriously with the core issue – what should pupils of different abilities be doing and how can we establish a process where we can collect reliable information. The process was dominated by the same attitude on all sides – how to impose a mentality already fixed.

It was also clearly affected by another element that has contemporary relevance: the constant churn of people. Just between summer 1989 and the end of 1992, there were: a new Permanent Secretary in May 1989, a new SoS in July 1989 (MacGregor), another new SoS in November 1990 (Clarke), a new PM and No10 team (Major), new heads for the NCC and SEAC in July 1991, then another new SoS in spring 1992 (Patten) and another new Permanent Secretary. Everybody blamed problems on predecessors and nobody could establish a consistent path.

Even its own Permanent Secretaries later attacked the DES. James Hamilton (1976-1983) was put into DES in June 1976 from the Cabinet Office to help with the Ruskin agenda and found a place where ‘when something was proposed someone would inevitably say, “Oh we tried that back in whenever and it didn’t work”…’. Geoffrey Holland (1992-3) admitted that, ‘It [DES] simply had no idea of how to get anything off the ground. It was lacking in any understanding or experience of actually making things happen.’

A central irony of the story shows how dysfunctional the system was. Thatcher never wanted a big NC and a complicated testing system but she got one. As some of her ideological opponents in the bureaucracy tried to simplify things when it was clear Baker’s original structure was a disaster, ministers were often fighting with them to preserve a complex system that could not work and which Thatcher had never wanted. This sums up the basic problem – a very disruptive process was embarked upon without the main players agreeing what the goal was.

Although the think tanks were much more influential in this period than they are now, Ferdinand Mount, head of Thatcher’s Policy Unit, made a telling point about their limitations: ‘Enthusiasts for reform at the IEA and the CPS were prodigal with committees and pamphlets but were much less helpful when it came to providing practical options for action. This made it difficult for the Policy Unit’s ideas to overcome the objections put forward by senior officials’. Thirty years later this remains true. Think tanks put out reports but they rarely provide a detailed roadmap that could help people navigate such reforms through the bureaucracy and few people in think tanks really understand how Whitehall works. This greatly limits their real influence. This is connected to a wider point. Few of those who comment prominently on education (or other) policy understand how Whitehall works, hence there is a huge gap between discussions of ideal policy and what is actually possible within a certain timeframe in the existing system, and commentators think that all sorts of things that happen do so because of ministers’ wishes, confusing public debate further.

I won’t go into the post-1997 story. There are various books that tell this whole story in detail. The National Curriculum remained but was altered; the test system remained but gradually narrowed from the original vision; there were some attempts at another major transformation (such as Tomlinson’s attempt to end A Levels, thwarted by Blair) but none took off; money poured into the school system and its accompanying bureaucracy at an unprecedented rate but, other than a large growth in the number and salaries of everybody, it remained unclear what if any progress was being made.

This bureaucracy spent a great deal of taxpayers’ money promoting concepts such as ‘learning styles’ and ‘multiple intelligences’ that have no proper scientific basis but which nevertheless were successfully blended with old ideas from Vygotsky and Piaget to dominate a great deal of teacher training. A lot of people in the education world got paid an awful lot of money (Hargreaves, Waters et al) but what happened to standards?

(The quotes above are taken mainly from Daniel Callaghan’s Conservative Party Education Policies 1976-1997.)

B. The cascading effects of GCSEs and the National Curriculum

Below I consider 1) the data on grade inflation in GCSEs and A Levels, 2) various studies from learned societies and others that throw light on the issue, 3) knock-on effects in universities.

1. Data on grade inflation in GCSEs and A Levels

We do not have an official benchmark against which to compare GCSE results. The picture is therefore necessarily hazy. As Coe has written, ‘we are limited by the fact that in England there has been no systematic, rigorous collection of high-quality data on attainment that could answer the question about systemic changes in standards.’ This is one of the reasons why in 2013 we, supported by Coe and others, pushed through (against considerable opposition including academics at the Institute of Education) a new ‘national reference test’ in English and maths at age 16, which I will return to in a later blog.

However, we can compare the improvement in GCSE results with a) results from international tests and b) consistent domestic tests uncontrolled by Whitehall.

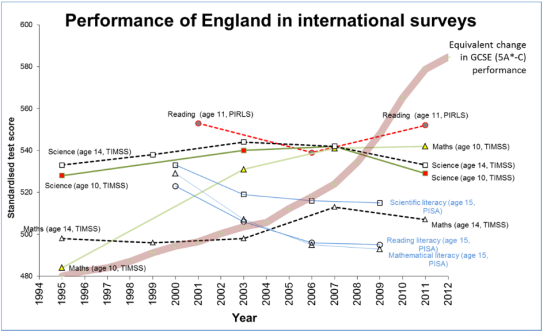

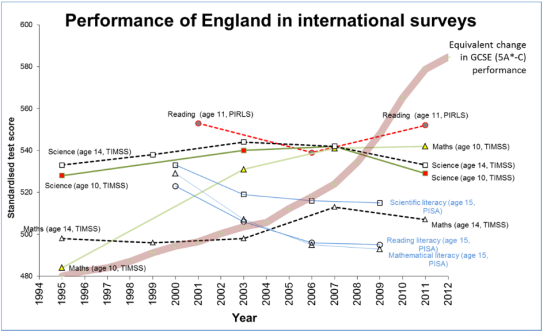

The first two graphs below show the results of this comparison.

Chart 1: Comparison of English performance in international surveys versus GCSE scores 1995-2012 (Coe)

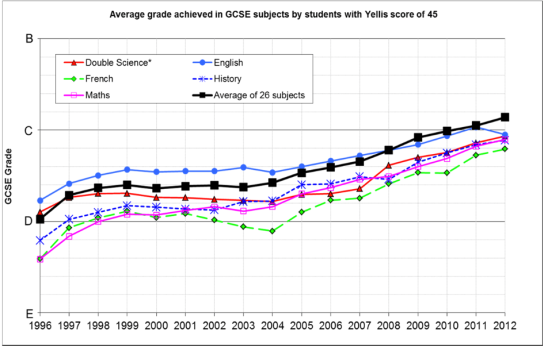

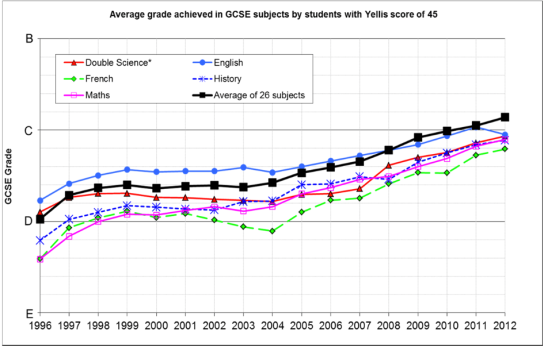

Chart 2: GCSE grades achieved by candidates with same maths & vocab scores each year 1996-2012 (Coe)

Professor Coe writes of Chart 1:

‘When GCSE was introduced in 1987 [I think he must mean 1988 as that was the first year of GCSEs or else he means ‘the year before GCSEs were first taken’], 26.4% of the cohort achieved five grade Cs or better. By 2012 the proportion had risen to 81.1%. This increase is equivalent to a standardised effect size of 1.63, or 163 points on the PISA scale… If we limit the period to 1995 – 2011 [as in Chart 1 above] the rise (from 44% to 80% 5A*-C) is equivalent to 99 points on the PISA scale [as superimposed on Chart 1]… [T]he two sets of data [international and GCSEs] tell stories that are not remotely compatible. Even half the improvement that is entailed in the rise in GCSE performance would have lifted England from being an average performing OECD country to being comfortably the best in the world. To have doubled that rise in 16 years is just not believable…

‘The question, therefore, is not whether there has been grade inflation, but how much…’ [Emphasis added.] (Professor Robert Coe, ‘Improving education: a triumph of hope over experience’, 18 June 2013, p. vi.)
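The conversion from pass rates to PISA points in the passage above can be checked roughly. Below is a minimal Python sketch of one way such a conversion can be done, which is not necessarily the method Coe used. It assumes that underlying attainment is normally distributed, that the pass threshold is fixed, and that one standard deviation corresponds to 100 points on the PISA scale. The function names are hypothetical. On these assumptions the 1995 – 2011 rise from 44% to 80% comes out at about 0.99 standard deviations, in line with the 99 points quoted. The same method gives roughly 1.5 for the rise from 26.4% to 81.1%, so the 1.63 figure presumably rests on a somewhat different calculation.

```python
from statistics import NormalDist

# Assumption: PISA scores are scaled so that one standard deviation is about 100 points.
PISA_SD = 100


def pass_rate_to_z(rate: float) -> float:
    """Distance (in SDs) of the cohort mean above the pass threshold,
    assuming normally distributed attainment. A 50% pass rate gives 0."""
    return NormalDist().inv_cdf(rate)


def rise_as_effect_size(before: float, after: float) -> float:
    """Shift in the cohort mean (in SDs) needed to move the pass rate
    from `before` to `after` if the threshold itself stayed fixed."""
    return pass_rate_to_z(after) - pass_rate_to_z(before)


# The 1995 - 2011 figures quoted from Coe: 44% rising to 80% with 5 A*-C.
effect = rise_as_effect_size(0.44, 0.80)
pisa_points = effect * PISA_SD
print(f"effect size: {effect:.2f} SD, about {pisa_points:.0f} PISA points")
```

The point of the sketch is only to show the scale of the claim: a shift of about one standard deviation in sixteen years is what the GCSE figures would imply if they reflected real attainment.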

Chart 2 plots the improving GCSE grades achieved by pupils scoring the same each year in YELLIS, a test of maths and vocabulary: pupils with the same YELLIS score get higher and higher GCSE grades as time passes. Coe concludes that although ‘it is not straightforward to interpret the rise in grades … as grade inflation’, the YELLIS data ‘does suggest that whatever improved grades may indicate, they do not correspond with improved performance in a fixed test of maths and vocabulary’ (Coe, ibid).

This YELLIS comparison suggests that in 2012 pupils received a grade higher in maths, history, and French GCSE, and almost a grade higher in English, than students of the same ability in 1996.

It is important to note that neither of Coe’s charts includes the effects of either a) the initial switch from O Level to GCSE or b) the changes to GCSEs between 1988 and 1995.

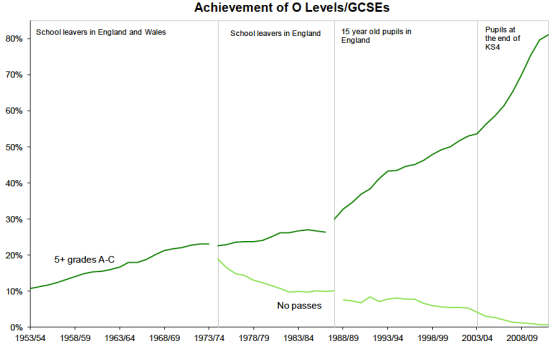

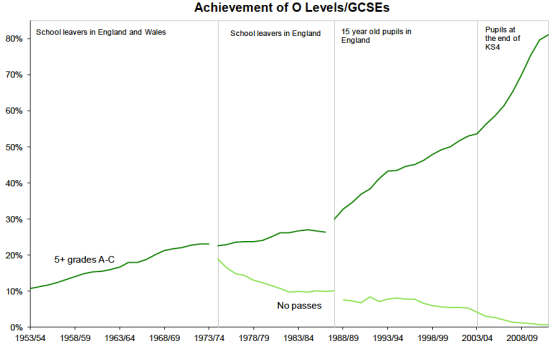

The next two charts show this earlier part of the story (both come from Education: Historical statistics, House of Commons, November 2012). NB. they have different end dates.

Chart 3: Proportion getting 5 O Levels / GCSEs at grade C or higher 1953/4 – 2008/9

Chart 4: Proportion getting 1+ or 3+ passes at A Level 1953/4 – 1998/9

Chart 3 shows that the period 1988-95 saw an even sharper increase in GCSE scores than post-1995 so a GCSE/YELLIS style comparison that included the years 1988-1995 would make the picture even more dramatic.

Chart 4 shows a dramatic increase in A Level passes after the introduction of GCSEs. One interpretation of this graph, supported by the 1997-2010 Government and teaching unions, is that this increase reflected large real improvements in school standards.

There is GCSE data that those who believe this argument could cite. In 1988, 8% of GCSEs were awarded an ‘A’. In 2011, 23% were awarded an ‘A’ or ‘A*’. The DfE published data in 2013 which showed that the number of pupils with ten or more A* grades trebled between 2002 and 2012. This implies a very large increase in the numbers of those excelling at GCSE, which is consistent with a picture of a positive knock-on effect on improving A Level results.

However, we have already seen that the claims for GCSEs are ‘not believable’ in Coe’s words. It also seems prima facie very unlikely that a sudden large improvement in A Level results from 1990 could be the result of immediate improvements in learning driven by GCSEs. There is also evidence for A Levels similar to the GCSE/YELLIS comparison.

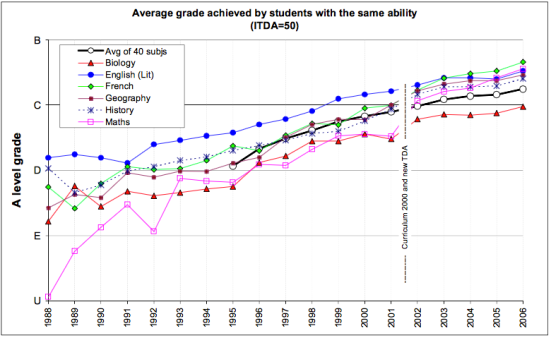

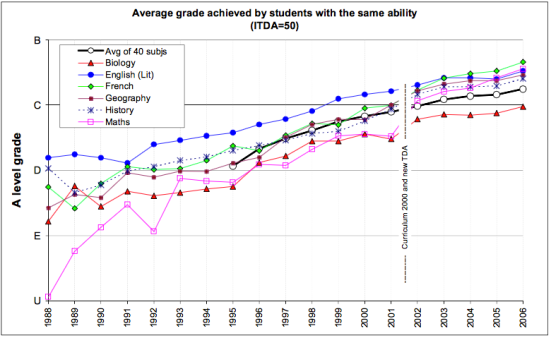

Chart 5: A level grades of candidates having the same TDA score (1988-2006)

Chart 5 plots A Level grades in different subjects against the international TDA test. As with GCSEs, this shows that pupils scoring the same in a non-government test got increasingly higher grades in A Levels. The change in maths is particularly dramatic from an ‘Unclassified’ mark in 1988 to a B/C in 2006.

What we know about GCSEs, combined with this information, makes it very hard to believe that the sudden dramatic increase in A Level performance since 1990 is because of real improvements. It suggests another interpretation: these dramatic increases in A Level results reflected (mostly or entirely) A Levels being made significantly easier, probably in order to compensate for GCSEs being much easier.

However, the data above can only tell part of the story. Logically, it is hard or impossible to distinguish between possible causes just from these sorts of comparisons. For example, perhaps someone might claim that A Level questions remained as challenging as before but grade boundaries moved – i.e. the exam papers were the same but the marking was easier. I think this is prima facie unlikely but the point is that logically the data above cannot distinguish between various possible dynamics.

Below is a collection of studies, reports, and comments from experts that I have accumulated over the past few years that throws light on which interpretation is more reasonable. Please add others in Comments.

(NB. David Spiegelhalter, a Professor of Statistics at Cambridge, has written about problems with PISA’s use of statistics. These arguments are technical. To a non-specialist like me, he seems to make important points that PISA must answer to retain credibility and the fact that it has not (as of the last time I spoke to DS in summer 2014) is a blot on its copybook. However, I do not think they materially affect the discussion above. Other international tests conducted on different bases all tell roughly the same story. I will ask DS if he thinks his arguments do undermine the story above and post his reply if any.)

2. Studies 2007 – now

NB1. Most of these studies are comparing changes over the past decade or so, not the period since the introduction of the NC and GCSEs in the 1980s.

NB2. I will reserve detailed discussion of the AS/A2/decoupling argument for a later blog as it fits better in the ‘post-2010 reforms’ section.

Learned societies. The Royal Society’s 2011 study of Science GCSEs: ‘the question types used provided insufficient opportunity for more able candidates … to demonstrate the extent of their scientific knowledge, understanding and skills. The question types restricted the range of responses that candidates could provide. There was little or no scope for them to demonstrate various aspects of the Assessment Objectives and grade descriptions… [T]he use of mathematics in science was examined in a very limited way.’ SCORE also published (2012) evidence on science GCSEs which reported ‘a wide variation in the amount of mathematics assessed across awarding organisations and confirmed that the use of mathematics within the context of science was examined in a very limited way. SCORE organisations felt that this was unacceptable.’

The 2012 SCORE report and Nuffield Report showed serious problems with the mathematical content of A Levels. SCORE was very critical:

‘For biology, chemistry and physics, it was felt there were underpinning areas of mathematics missing from the requirements and that their exclusion meant students were not adequately prepared for progression in that subject. For example, for physics many of the respondents highlighted the absence of calculus, differentiation and integration, in chemistry the absence of calculus and in biology, converting between different units… For biology, chemistry and physics, the analysis showed that the mathematical requirements that were assessed concentrated on a small number of areas (e.g. numerical manipulation) while many other areas were assessed in a limited way, or not at all… Survey respondents were asked to identify content areas from the mathematical requirements that should feature highly in assessments. In most cases, the biology, chemistry and physics respondents identified mathematical content areas that were hardly or not at all assessed by the awarding organisations.

‘[T]he inclusion of more in-depth problem solving would allow students to apply their knowledge and understanding in unstructured problems and would increase their fluency in mathematics within a science context.’

‘The current mathematical assessments in science A-levels do not accurately reflect the mathematical requirements of the sciences. The findings show that a large number of mathematical requirements listed in the biology, chemistry and physics specifications are assessed in a limited way or not at all within these papers. The mathematical requirements that are assessed are covered repeatedly and often at a lower level of difficulty than required for progression into higher education and employment. It has also highlighted a disparity between awarding organisations in their assessment of the use of mathematics within biology, chemistry and physics A-level. This is unacceptable and the examination system, regardless of the number of awarding organisations, must ensure the assessments provide an authentic representation of the subject and equip all students with the necessary skills to progress in the sciences.

‘This is likely to have an impact on the way that the subjects are taught and therefore on students’ ability to progress effectively to STEM higher education and employment.’ SCORE, 2012. Emphasis added.

The 2011 Institute of Physics report showed strong criticism from university academics of the state of physics and engineering undergraduates’ mathematical knowledge. Four-fifths of academics said that university courses had changed to deal with a lack of mathematical fluency and 92% said that a lack of mathematical fluency was a major obstacle.

‘The responses focused around mathematical content having to be diluted, or introduced more slowly, which subsequently impacts on both the depth of understanding of students, and the amount of material/topics that can be covered throughout the course…

‘Academics perceived a lack of crossover between mathematics and physics at A-level, which was felt to not only leave students unprepared for the amount of mathematics in physics, but also led to them not applying their mathematical knowledge to their learning of physics and engineering.’ IOP, 2011.

The 2011 Centre for Bioscience report criticised Biology and Chemistry A Levels and the preparation of pupils for bioscience degrees: ‘very many lack even the basics… [M]any students do not begin to attempt quantitative problems and this applies equally to those with A level maths as it does to those with C at GCSE. A lack of mathematics content in A level Biology means that students do not expect to encounter maths at undergraduate level. There needs to be a more significant mathematical component in A level biology and chemistry.’ The Royal Society of Chemistry report, The five decade challenge (2008), said there had been ‘catastrophic slippage in school science standards’ and that Government claims about improving GCSE scores were ‘an illusion’. (The Department said of the RSC report, ‘Standards in science have improved year on year thanks to 10 years of sustained investment and improvement in teaching and the education system – this is something we should celebrate, not criticise. Times have changed.’)

Ofqual, 2012. Ofqual’s Standards Review in 2012 found grade inflation in both GCSE and A-levels between 2001-03 and 2008-10: ‘Many of these reviews raise concerns about the maintenance of standards… In the GCSEs we reviewed (biology, chemistry and mathematics) we found that changes to the structure of the assessments, rather than changes to the content, reduced the demand of some qualifications.’

On A-levels, ‘In general we found that changes to the way the content was assessed had an impact on demand, in many cases reducing it. In two of the reviews (biology and chemistry) the specifications were the same for both years. We found that the demand in 2008 was lower than in 2003, usually because the structure of the assessments had changed. Often there were more short answer, structured questions’ (Ofqual, Standards Reviews – A Summary, 1 May 2012, found here).

Chief Executive of Ofqual, Glenys Stacey, has said: ‘If you look at the history, we have seen persistent grade inflation for these key qualifications for at least a decade… The grade inflation we have seen is virtually impossible to justify and it has done more than anything, in my view, to undermine confidence in the value of those qualifications’ (Sunday Telegraph, 28 April 2012).

The OECD’s International Survey of Adult Skills (October 2013). This assessed numeracy, literacy and computing skills of 16-24-year-olds. The tests were done over 2011/2012. England was 22nd out of 24 for literacy, 21st out of 24 for numeracy, and 16th out of 20 for ‘problem solving in a technology-rich environment’.

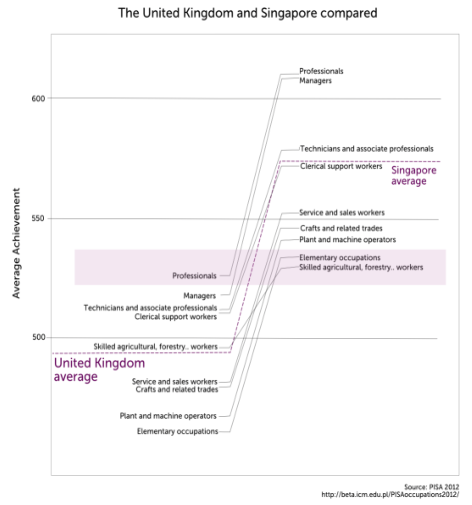

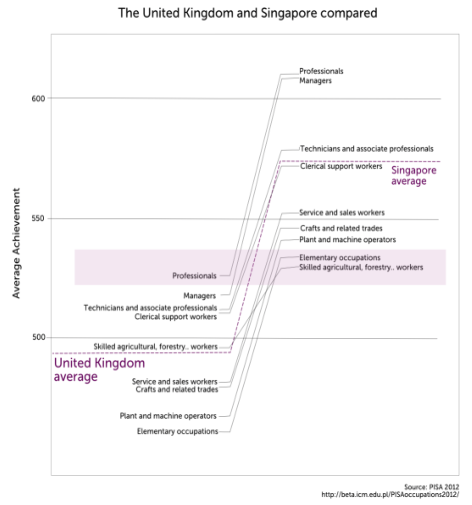

PISA 2012. The normal school PISA tests taken in 2012 (reported 2013) showed no significant change between 2009 and 2012. England was 21st for science, 23rd for reading, and 26th for mathematics. A 2011 OECD report concluded: ‘Official test scores and grades in England show systematically and significantly better performance than international and independent tests… [Official results] show significant increases in quality over time, while the measures based on cognitive tests not used for grading show declines or minimal improvements’ (OECD Economic Surveys: United Kingdom, 16 March 2011, pp. 88-89). This interesting chart shows that in the PISA maths test the children of English professionals perform the same as children of Singapore cleaners (Do parents’ occupations have an impact on student performance?, PISA 2014).

Chart 6: Pupil maths scores by parent occupation, UK (left) and Singapore (right) (PISA 2012)

TIMSS/PIRLS. The TIMSS/PIRLS tests (taken summer 2011, reported December 2012) told a similar story to PISA. England’s score in reading at age 10 increased since 2006 by a statistically significant amount. England’s score in science at age 10 decreased since 2007 by a statistically significant amount. England’s scores in science at age 14 and mathematics at ages 10 and 14 showed no statistically significant changes since 2007. (According to experts, the PISA maths test relies more on language comprehension than TIMSS, which is supposedly why Finland scores higher in the former than the latter.)

National Numeracy (February 2012). Research showed that in 2011 only a fifth of the adult population had mathematical skills equivalent to a ‘C’ in GCSE, down a few percent from the last survey in 2003. About half of 16-65 year olds have at best the mathematical skills of an 11 year-old. A fifth of adults will struggle with understanding price labels on food and half ‘may not be able to check the pay and deductions on a wage slip.’

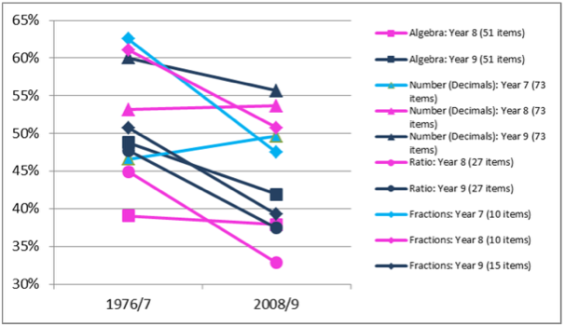

King’s College, 2009. A major study by academics from King’s College London and Durham University found that basic skills in maths have declined since the 1970s. In 2008, fewer than a fifth of 14 year-olds could write 11/10 as a decimal. In the early 1980s, only 22 per cent of pupils obtained a GCE O-level grade C or above in maths. In 2008, over 55 per cent gained a GCSE grade C or above in the subject (King’s College London/University of Durham, ‘Secondary students’ understanding of mathematics 30 years on’, 5 September 2009, found here).

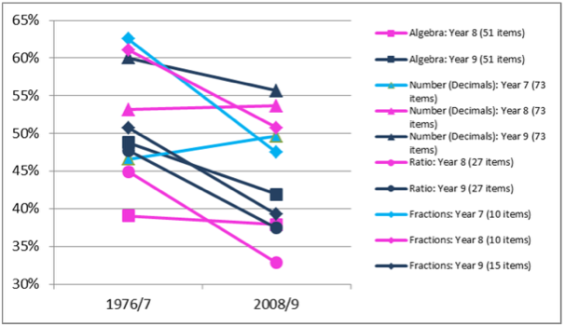

Chart 7: Performance on ICCAMS / CSMS Maths tests showing declines over time

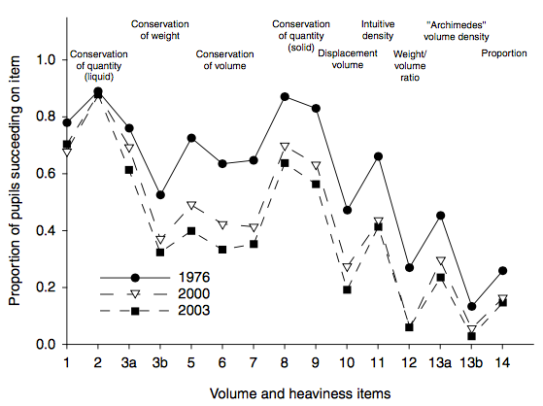

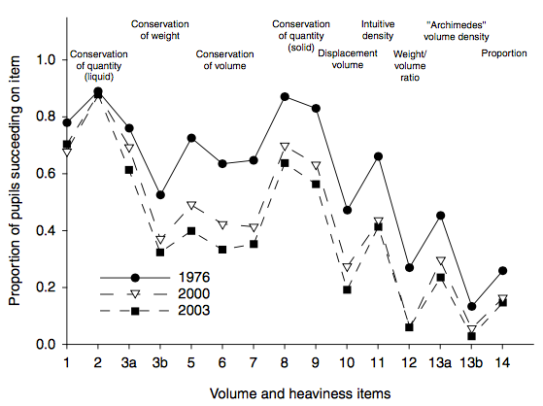

Shayer et al (2007) found that performance in a test of basic scientific concepts fell significantly between 1976 and 2003. ‘[A]lthough both boys and girls have shown great drops in performance, the relative drop is greater for boys… It makes it difficult to believe in the validity of the year on year improvements reported nationally on Key Stage 3 NCTs in science and mathematics: if children are entering secondary from primary school less and less equipped with the necessary mental conditions for processing science and mathematics concepts it seems unlikely that the next 2.5 years KS3 teaching will have improved so much as more than to compensate for what students of today lack in comparison with 1976.’

Chart 8: Performance on tests of scientific concepts, 1976 – 2003 (Shayer)

Tymms (2007) reviewed assessment evidence in mathematics from children at the end of primary school between 1978 and 2004 and in reading between 1948 and 2004. The conclusion was that standards in both subjects ‘have remained fairly constant’.

Warner (2013) on physics. Professor Mark Warner (Cambridge University) produced a fascinating report (2013) on problems with GCSE and A Level Physics and compared the papers to old O Levels, A Levels, ‘S’ Level papers, Oxbridge entry exams, international exams and so on. After reading it, there is no room for doubt. The standards demanded in GCSEs and A Levels have fallen very significantly.

‘[In modern papers] small steps are spelt out so that not more than one thing needs to be addressed before the candidate is set firmly on the right path again. Nearly all effort is spent injecting numbers into formulae that at most require GCSE-level rearrangements… All diagrams are provided… 1986 O-level … [is] certainly more difficult than the AS sample… 1988 A-level … [is] harder than most Cambridge entrance questions currently… 1983 Common Entrance [is] remarkably demanding for this age group, approaching the challenge of current AS… There is a staggering difference in the demands put on candidates… Exams [from the 1980s] much lower down the school system are in effect more difficult than exams given now in the penultimate years [i.e. AS].’

For example, the mechanics problems in GCSE Physics are substantially shallower than those in 1980s O Level, which examined concepts now in A Level. The removal of calculus from A Level physics badly undermined it. Calculus is tested in A Level Maths’ Mechanics I paper, and Mechanics II and III test deeper material than Physics A Level. This is one of the reasons why the Cambridge Physics department stopped requiring Physics A Level for entry and made clear that Further Maths A Level is acceptable instead (many say it is better preparation for university than physics A Level is).

Warner also makes the point that making Physics GCSE and A Level much easier did not even increase the number taking physics degrees, which has declined sharply since the mid-1980s. He concludes: ‘one could again aim for a school system to get a sizable fraction of pupils to manage exams of these [older] standards. Children are not intrinsically unable to attack such problems.’ (NB. The version of this report on the web is not the full version – I would urge those interested to email Professor Warner.)

Gowers (2012) on maths. Tim Gowers, Cambridge professor and Fields Medallist, described some problems with Maths A Level and concluded:

‘The general point here is of course that A-levels have got easier [emphasis added] and schools have a natural tendency to teach to the test. If just one of those were true, it would be far less of a problem. I would have nothing against an easy A-level if people who were clever enough were given a much deeper understanding than the exam strictly required (though as I’ve argued above, for many people teaching to the test is misguided even on its own terms, since they will do a lot better on the exam if they have not been confined to what’s on the test), and I would not be too against teaching to the test if the test was hard enough…

‘[S]ome exams, such as GCSE maths, are very very easy for some people, such as anybody who ends up reading mathematics at Cambridge (but not just those people by any means). I therefore think that the way to teach people in top sets at schools is not to work towards those exams but just to teach them maths at the pace they can manage.’

Durham University analysis gives data to quantify this conclusion. Pupils who would have received a U (unclassified) in Maths A-Level in 1988 received a B/C in 2006 – see above for Chart 5 showing this (CEM Centre Durham University, Changes in standards at GCSE and A-Level: Evidence from ALIS and YELLIS, April 2007). Further Maths A Level is supposedly the toughest A Level and probably it is but a) it is not the same as its 1980s ancestor and b) it now introduces pupils to material such as matrices that used to be taught in good prep schools.

I spent a lot of time 2007-14 talking to maths dons, including heads of departments, across England. The reason I quote Gowers is that I never heard anybody dispute his conclusion but he was almost the only one who would say it publicly. I heard essentially the same litany about A Level maths from everybody I spoke to: although there were differences of emphasis, nobody disputed these basic propositions. 1) The questions became much more structured so pupils are led up a scaffolding with less requirement for independent problem-solving. 2) The emphasis moved to memorising some basic techniques the choice of which is clearly signalled in the question. 3) The modular system a) encouraged a ‘memorise, regurgitate, forget’ mentality and b) undermined learning about how different topics connect across maths, both of which are bad preparation for further studies. (There are also some advantages to a modular system that I will return to.) 4) Many undergraduates, including even those in the top 5% at such prestigious universities as Imperial, therefore now struggle in their first year as they are not well-prepared by A Level for the sort of problems they are given in undergraduate study. (The maths department at Imperial became so sick of A Level’s failings that they recently sought and got approval to buy Oxford’s entrance exam for use in their admission system.)

I will not go into arguments about vocational qualifications here but note the conclusion of Alison Wolf whose 2011 report on this was not disputed by any of the three main parties:

‘The staple offer for between a quarter and a third of the post-16 cohort is a diet of low-level vocational qualifications, most of which have little to no labour market value.’

3. Knock-on effects in universities

Serious lack of maths skills

There are many serious problems with maths skills. Part of the reason is that many universities do not even demand A Level maths. The result? As of about 2010-12, about 20% of Engineering undergraduates, about 40% of Chemistry and Economics undergraduates, and about 60-70% of Biology and Computer Science undergraduates did not have A Level Maths. Fewer than 10% of undergraduate bioscience degree courses demand A Level Maths; therefore, ‘problems with basic numeracy are evident and this reflects the fact that many students have grades less than A at GCSE Maths. These students are unlikely to be able to carry out many of the basic mathematical approaches, for example unable to manipulate scientific notation with negative powers so commonly used in biology’ (2011 Biosciences report). (I think that history undergraduates should be able to manipulate scientific notation with negative powers – this is one of the many things that should be standard for reasonably able people.)

The Royal Society estimated (Mathematical Needs, 2012) that about 300,000 per year need a post-GCSE Maths course but only ~100,000 do one. (This may change thanks to Core Maths starting in 2015, see later blog.) This House of Lords report (2012) on Higher Education in STEM subjects concluded: ‘We are concerned that … the level at which the subject [maths] is taught does not meet the requirements needed to study STEM subjects at undergraduate level… [W]e urge HEIs to introduce more demanding maths requirement for admissions into STEM courses as the lack, or low level, of maths requirements at entry acts as a disincentive for pupils to study maths and high level maths at A level.’ House of Lords Select Committee on Science and Technology, Higher Education in STEM subjects, 2012.

Further, though this subject is beyond the scope of this blog, it is also important that the maths PhD pipeline ‘which was already badly malfunctioning has been seriously damaged by EPSRC decisions’, including withdrawal of funding from non-statistics subjects which drew the ire of UK Fields Medallists, cf. Submission by the Council for the Mathematical Sciences to the House of Lords, 2011. The weaknesses in biology also feed into the bioscience pipeline: only six percent of bioscience academics think their graduates are well prepared for a masters in the fast-growing field of Computational Biology (p.8 of report).

Closing of language departments, decline of language skills

I have not found official stats for this but according to research done for the Guardian (with FOIs):

‘The number of universities offering degrees in the worst affected subject, German, has halved over the past 15 years. There are 40% fewer institutions where it is possible to study French on its own or with another language, while Italian is down 23% and Spanish is down 22%.’

As Katrin Kohl, professor of German at Jesus College (Oxford) has said, ‘The UK has in recent years been systematically squandering its already poor linguistic resources.’ Dawn Marley, senior lecturer in French at the University of Surrey, summarised problems across languages:

‘We regularly see high-achieving A-level students who have only a minimal knowledge of the country or countries where the language of study is spoken, or who have limited understanding of how the language works. Students often have little knowledge of key elements in a country’s history – such as the French Revolution, or the fact that France is a republic. They also continue to struggle with grammatical accuracy, and use English structures when writing in the language they are studying… The proposals for the revival of A-level are directly in line with what most, if not all, academics in language departments would see as essential.’ (Emphasis added.)

The same picture applies to classical languages. Already by 1994 the Oxford Classics department was removing texts such as Thucydides as compulsory elements in ‘Greats’ because they were deemed ‘too hard’. These changes continued and have made Classics a very different subject than it was before 1990. At Oxford, they introduced whole new courses (Mods B then Mods C) that do not require any prior study of the ancient languages themselves. The first year of Greats now involves remedial language courses.

I quote at length from a paper by John Davie, a Lecturer in Classics at Trinity College, Oxford, as his comments summarise the views of other senior classicists in Oxbridge and elsewhere who have been reluctant to speak out (In Pursuit of Excellence, Davie, 2013). Inevitably, the problems described are damaging the pipeline for masters, PhDs, and future scholarship.

‘Classics as an academic subject has lost much of its intellectual force in recent years. This is true not only of schools but also, inevitably, of universities, which are increasingly required to adapt to the lowering of standards…

‘In modernist courses…, there is (deliberately) no systematic learning of grammar or syntax, and emphasis is laid on fast reading of a dramatic continuous story in made-up Latin which gives scope for looking at aspects of ancient life. The principle of osmosis underlying this approach, whereby children will learn linguistic forms by constant exposure to them, aroused scepticism among many teachers and has been thoroughly discredited by experts in linguistics. Grammar and syntax learned in this piecemeal fashion give pupils no sense of structure and, crucially, deny them practice in logical analysis, a fundamental skill provided by Classics…

‘[W]e have, in GCSE, an exam that insults the intelligence… Recent changes to this exam have by general consent among teachers made the papers even easier.

‘In the AS exam currently taken at the end of the first year of A-level … students study two small passages of literature, which represent barely a third of an original text. They are asked questions so straightforward as to verge on the banal and the emphasis is on following a prescribed technique of answering, as at GCSE. Imagination and independent thought are simply squeezed out of this process as teachers practise exam-answering technique in accordance with the narrow criteria imposed on examiners.

‘The level of difficulty [in AS] is not substantially higher than that of GCSE, and yet this is the exam whose grades and marks are consulted by the universities when they are trying to determine the ability of candidates… Having learned the translation of these bite-sized chunks of literature with little awareness of their context or the wider picture (as at GCSE, it is increasingly the case that pupils are incapable of working out the Latin/Greek text for themselves, and so lean heavily on a supplied translation), they approach the university interview with little or no ability to think “outside the box”. Dons at Oxford and Cambridge regularly encounter a lack of independent thought and a tendency to fall back on generalisations that betray insufficient background reading or even basic curiosity about the subject. This need not be the case and is clearly the product of setting the bar too low for these young people at school…

‘At A2 … students read less than a third of a literary text they would formerly have read in its entirety.

‘There is the added problem that young teachers entering the profession are themselves products of the modernist approach and so not wholly in command of the classical languages themselves. As a result they welcome the fact that they are not required by the present system to give their pupils a thorough grounding in the language, embracing the less rigorous approach of modern course-books with some relief.

‘In the majority of British universities Classics in its traditional form has either disappeared altogether or has been replaced by a course which presents the literature, history and philosophy mainly (or entirely) in translation, i.e. less a degree course in Classics than in Classical Civilisation.

‘This situation has been forced upon university departments of Classics by the impoverished language skills of young people coming up from schools… It is not only the classical languages but English itself which has suffered in this way in the last few decades. Every university teacher of the classical languages knows that he cannot assume familiarity with the grammar and syntax of English itself, and that he will have to teach from scratch such concepts as an indirect object, punctuation or how a participle differs from a gerund…

‘Even at Oxford cuts have been made to the number of texts students are required to read and, in those texts that remain, not as many lines are prescribed for reading in the original Latin or Greek.

‘In the last ten years of teaching for Mods [at Oxford] I have been struck by how the first-year students who come my way at the start of the summer term appear to know less about the classical languages each year, an experience I know to be shared by dons at other colleges…

‘GCSE should be replaced by a modern version of the O-level that stretches pupils… This would make the present AS exam completely unsuitable, and either a more challenging set of papers should be devised, if the universities wish to continue with pre A-level interviewing, or there should be a return to an unexamined year of wide reading before the specialisation of the last year.

‘Although the present exam, A2, has more to recommend it than AS, it also would no longer be fit for purpose and would need strengthening. As part of both final years there should be regular practice in the writing of essays, a skill that has been largely lost in recent years because of the exam system and is (rightly) much missed by dons.’

This combination of problems explains why we funded a project with Professor Pelling, Regius Professor of Greek at Oxford, to fund teacher training and language enrichment courses for schools.

I will not go into other humanities subjects. I read Ancient & Modern History and have thoughts about it but I do not know of any good evidence similar to the reports quoted above by the likes of the Royal Society. I have spoken to many university teachers. Some, such as Professor Richard Evans (Cambridge) told me they think the standard of those who arrive as undergraduates is roughly the same as twenty years ago. Others at Oxbridge and elsewhere told me they think that essay writing skills have deteriorated because of changes to A Level (disputed by Evans and others) and that language skills among historians have deteriorated (undisputed by anyone I spoke to).

For example, the Cambridge Professor of Mediterranean History, David Abulafia, has contradicted Evans and, like classicists, pointed out the spread of remedial classes at Cambridge:

‘It’s a pity, then, that the director of admissions at Cambridge has proclaimed that the old system [pre-Gove reforms] is good and that AS-levels – a disaster in so many ways – are a good thing because somehow they promote access. I don’t know for whom he is speaking, but not for me as a professor in the same university…

‘[Gove] was quite right about the abolition of the time-wasting, badly devised and all too often incompetently marked AS Levels; these dreary exams have increasingly been used as the key to admissions to Cambridge, to the detriment of intellectually lively, quirky, candidates full of fizz and sparkle who actually have something to say for themselves…

‘Bogus educational theories have done so much to damage education in this country… The effects are visible even in a great university such as Cambridge, with a steady decline in standards of literacy, and with, in consequence, the provision in one college after another of ‘skills teaching’, so that students who no longer arrive knowing how to structure an essay or even read a book can receive appropriate ‘training’… Even students from top ranked schools seem to find it very difficult … to write essays coherently… In the sort of exams I am thinking of, essay writing comes much more to the fore and examiners would be making more subjective judgements about scripts. In an ideal world there would be double marking of scripts.’ Emphasis added.

Judging essay skills is a more nebulous task than judging the quality of mechanics questions. Also, there is less agreement among historians about the sort of things they want to see in school exams compared to mathematicians and physicists who largely (in my experience, I stress, which is limited) agree about the sorts of problems they want undergraduates to be able to solve and the skills they want them to have.

I will quote a Professor of English at Exeter University, Colin MacCabe, whose view of the decline of essay skills is representative of many comments I have heard, but I cannot say confidently that this view represents a consensus, despite his claim:

‘Nobody who teaches A-level or has anything to do with teaching first-year university students has any doubt that A Levels have been dumbed down… The writing of the essay has been the key intellectual form in undergraduate education for more than a century; excelling at A-level meant excelling in this form. All that went by the board when … David Blunkett, brought in AS-levels… A-levels … became two years of continuous assessment with students often taking their first module within three months of entering the sixth form. This huge increase in testing went together with a drastic change in assessment. Candidates were not now marked in relation to an overall view of their ability to mount and develop arguments, but in relation to their ability to demonstrate achievement against tightly defined assessment objectives… A-levels, once a test of general intellectual ability in relation to a particular subject, are now a tightly supervised procession through a series of targets. Assessment doesn’t come at the end of the course – it is the course… In English, students read many fewer books… Students now arrive at university without the knowledge or skills considered automatic in our day… One of the results of the changes at A-level is that the undergraduate degree is itself a much more targeted affair. Students lack of a general education mean that special subjects, dissertations etc are added to general courses which are themselves much more limited in their approach… One result of this is a grade inflation much more dramatic even than A-levels… [T]here is little place within a modern English university for students to develop the kind of intellectual independence and judgment, which has historically been the aim of the undergraduate degree.’ Observer, 22 August, 2004. (Emphasis added.)

If anybody knows of studies on history and other humanities please link in Comments below.

Oxbridge entrance

As political arguments increasingly focused on ‘participation’ and ‘access’, Oxford and Cambridge largely abandoned their own entrance exams in the 1990s. There were some oddities. Cambridge University dropped its maths test and was so worried by the results that it immediately asked for and was given special dispensation to reintroduce it, and it has used one since (now known as the STEP paper, used by a few other universities). Other Cambridge departments that wanted to do the same were refused permission and some of them (including the physics department) now use interviews to test material they would like to test in a written exam. Oxford changed its mind and gradually reintroduced admission tests in some subjects. (E.g. it does not use STEP in maths but uses its own test which has more ‘applied’ maths.) Cambridge now uses AS Levels. Oxford does not (but does not like to explain why).

A Levels are largely useless for distinguishing between candidates in the top 2% of ability (i.e. two standard deviations above average). Oxbridge entry now involves a complex and incoherent set of procedures. Some departments use interviews to test skills that are i) wholly or largely untested by A Levels and ii) not explicitly set out anywhere. For example, if you go to an interview for physics at Cambridge, they will ask you questions like ‘how many photons hit your eye per second from Alpha Centauri?’ – i.e. questions that you cannot cram for but from which much information can be gained by tutors watching how students grapple with the problem.
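As a rough check on the ‘top 2%’ figure, here is a minimal sketch that assumes ability is normally distributed (an assumption of this example, not something the admissions data establishes). The function name `fraction_above` is made up for illustration. It computes the share of a normal population lying more than a given number of standard deviations above the mean.

```python
import math


def fraction_above(z: float) -> float:
    """Share of a standard normal population lying more than z SDs above the mean.

    Assumes a normal distribution, which is only an approximation
    for any real measure of ability.
    """
    # Upper-tail probability of the standard normal, via the complementary error function.
    return 0.5 * math.erfc(z / math.sqrt(2))


if __name__ == "__main__":
    share = fraction_above(2.0)
    # Under the normality assumption this comes out at a little over 2%.
    print(f"Share more than 2 SDs above the mean: {share:.2%}")
```

On that assumption, two standard deviations above the mean corresponds to slightly more than 2% of the cohort, so the shorthand in the text is a fair approximation.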

The fact that the real skills they want to test are asked about in interviews rather than in public exams is, in my opinion, not only bad for ‘standards’ but is also unfair. Rich schools with long connections to Oxbridge colleges have teachers who understand these interviews and know how to prepare pupils for them. They still teach the material tested in old exams and other materials such as Russian textbooks created decades ago. A comprehensive in east Durham that has never sent anybody to Oxbridge is very unlikely to have the same sort of expertise and is much more likely to operate on the very mistaken assumption that getting a pupil to three As is sufficient preparation for Oxbridge selection. Testing skills in open exams that everybody can see would be fairer.

I will return to this issue in a later blog but it is important to consider the oddities of this situation. Decades ago, open public standardised tests were seen as a way to overcome prejudice. For example, Ivy League universities like Harvard infamously biased their admissions system against Jews because a fair open process based on intellectual abilities, and ignoring things like lacrosse skills, would have put more Jews into Harvard than Harvard wanted. Similar bias is widespread now in order to keep the number of East Asians low. It is no coincidence that Caltech’s admissions policy is unusually based on academic ability and it has a far higher proportion of East Asians than the likes of Harvard.

Similar problems apply to Oxbridge. A consequence of making exams easier and removing Oxbridge admissions tests was to make the process more opaque and therefore biased against poorer families. The fascinating journey made by the intellectual Left on the issue of standardised tests is described in Steven Pinker’s recent influential essay on university admissions. I agree with him that a big part of the reason for the ‘madness’ is that the intelligentsia ‘has lost the ability to think straight about objective tests’. Half a century ago, the Left fought for standardised tests to overcome prejudice; now many on the Left oppose tests and argue for criteria that give the well-connected middle classes unfair advantages.

This combination of problems is one of the reasons why the Cambridge pure maths department and physics department worked with me to develop projects to redo 16-18 curricula, teacher training, and testing systems. Cambridge is even experimenting with a ‘correspondence Free School’ idea proposed by the mathematician Alexander Borovik (who attended one of the famous Russian maths schools). Powerful forces tried to stop these projects happening because they are, obviously, implicit condemnations of the existing system – condemnations that many would prefer had never seen the light of day. Similar projects in other departments at other universities were kiboshed for the same reason, as were other proposals for specialist maths schools as per the King’s project (which also would never have happened but for the determination of Alison Wolf and a handful of heroic officials in the DfE). I will return to this too.

C. Conclusions

Here are some tentative conclusions.

- The political and bureaucratic process for the introduction of the GCSE and National Curriculum was a shambles. Those involved did not go through basic processes to agree aims. Implementation was awful. All elements of the system failed children. There are important lessons for those who want to reform the current system.

- Given the weight of evidence above, it is hard to avoid the conclusion that GCSEs were made easier than O Levels and became easier still over time. This means that, from age 14, at least the top fifth are aimed at lower standards than they would have been previously (not that O Levels were at all optimal). Many of them spend two years on low-grade material and repetitive, boring drills, so that the school can maximise its league table position, instead of delving deeper into subjects. Inflation seems to have stopped in the last two years, perhaps temporarily, but only through the use of an Ofqual system known as ‘comparable outcomes’, which is barely understood by anybody in the school system or DfE.

- A Levels, at least in maths, sciences, and languages, were quickly made easier after 1988, and not just by enough to keep pass marks stable but by enough to lead to large increases. Even A Level students are aimed at mundane tasks like ‘design a poster’ that are suitable for small children – not near-adults. (As I type this I am looking at an Edexcel textbook for Further Maths A Level which, for some reason, Edexcel has chosen to decorate with the picture of a child in a ‘Robin’ masked outfit.)

- The old ‘S’ Level papers, designed to stretch the best A Level students, were abandoned, which contributed to a decline in the standards aimed for among the top 5%.

- University degrees in some subjects therefore also had to become easier (e.g. classics) or longer (natural sciences) in order to avoid increases in failure rates. This happened in some subjects even in elite universities. Remedial courses spread, even in elite universities, to teach/improve skills that were previously expected on arrival (including Classics at Oxford and History at Cambridge). Not all of the problems are because of failures in schools or easier exams. Some are because universities themselves for political reasons will not make certain requirements of applicants. Even if the exam system were fixed, this would remain a big problem. On the other hand, while publicly speaking out for AS Levels, admissions officers also, very quietly, have been gradually introducing new, non-Government/Ofqual regulated, tests for admissions purposes. On this, it is more useful to watch what universities do than what they say.

- These problems have cascaded right through the system and now affect the pipeline into senior university research positions in maths, sciences, and languages. For example, the lack of maths skills among biologists is hampering the development of synthetic biology and computational biology. It is very common now to have (private) discussions with scientists deploring the decline in English research universities. Just in the past few weeks I have had emails from an English physicist now at Harvard and a prominent English neuroscientist giving me details of these developments and how we are falling further behind American universities. As they say, however, nobody wants to speak out.

- It is much easier to see what has happened at the top end of the ability curve, where effects show up in universities, than it is for median pupils. The media also focuses on issues at the top end of the ability curve, A Levels, and the Russell Group.

- Because politicians took control of the system and used results to justify their own policies, and because they control funding, debate over standards became thoroughly dishonest, starting with the Conservative government in the 1980s and continuing to now when academics are pressured not to speak out by administrators for fear of politicians’ responses. When governments are in control of the metrics according to which they are judged, there is likely to be dishonesty. If people – including unions, teachers, and officials – claim they deserve more money on the basis of metrics that are controlled by a small group of people operating an opaque process and controlling the regulator themselves, there is likely to be dishonesty.

An important caveat. It is possible that simultaneously a) the eight conclusions above are true and b) the school system has improved in various ways. What do I mean?

This is a coherent (not necessarily right) conclusion from the story told above…

GCSEs are significantly easier than O Levels. Nevertheless, the switch to GCSEs also involved many comprehensives and secondary moderns dropping the old idea that maybe only a fifth of the cohort are ‘academic’ – the idea from Plato’s Republic of gold, silver, and bronze children, that influenced the 1944 Act. Instead, more schools began to focus more pupils on academic subjects. Even though the standards demanded were easier than in the pre-1988 exams, this new focus (combined with other things) at least led between 1988 and now to a) a reduction in the number of truly awful schools and b) more useful knowledge and skills at least for the bottom fifth of the cohort (in ability terms), and perhaps for more. Perhaps the education of median ability pupils stayed roughly the same (declining a bit in maths) hence the consistent picture in international tests, the King’s results comparing maths in 1978/2008, Shayer’s results and so on (above). Meanwhile the standards demanded by post-1988 A Levels clearly fell (at least in some vital subjects), as the changes in universities testify, and S Level papers vanished, so the top fifth of the cohort (and particularly the +2 standard deviation population, i.e. the top 2%) leave school in some subjects considerably worse educated than in the 1980s. (Given most scientific and technological breakthroughs come from among this top 2% this has a big knock-on effect.) Private schools felt incentivised to perform better than state schools on easier GCSEs and A Levels rather than pursue separate qualifications with all the accompanying problems. There remains no good scientific data on what children at different points on the ability curve are capable of achieving given excellent teaching so the discussion of ‘standards’ remains circular. Easier GCSEs and A Levels are consistent with some improvements for the bottom fifth, roughly stability for the median, significant decline for the top fifth, and fewer awful schools.

This is coherent. It fits the evidence sketched above.

But is it right?

In the next blog in this series I will consider issues of ‘ability’ and the circularity of the current debate on ‘standards’.

Questions?

If people accept the conclusions about GCSEs and A Levels (at least in maths, sciences, and languages, I stress again) how should this evidence be weighed against the very strong desire of many in the education system (and Parliament and Whitehall) to maintain a situation in which the vast majority of the cohort are aimed at GCSEs (or international equivalents that are not hugely different) and, for those deemed ‘academic’, A Levels?

Do the gains from this approach outweigh the losses for an unknown fraction of the ‘more able’?

Is there a way to improve gains for all points on the ability distribution?

I have been told that there is no grade inflation in music exams. Is this true? If yes, is this partly because they are not regulated by the state? Are there other factors? Has A Level Music got easier? If not, why not?

What sort of approaches should be experimented with instead of the standard approaches seen in O Levels, GCSEs, and A Levels?

What can be learned from non-Government regulated tests such as Force Concepts Tests (physics), university admissions tests, STEP, IQ tests and so on?

What are the best sources on ‘S’ Level papers and what happened with Oxbridge entrance exams?

What other evidence is there? Where are analyses similar to Warner’s on physics for other subjects?

What evidence is there for university grade inflation, which many tell me is now worse than inflation in GCSEs and A Levels?