This blog considers two recent papers on the dynamics of scientific research: one in Nature and one by the brilliant physicist, Michael Nielsen, and the brilliant founder of Stripe, Patrick Collison, who is a very unusual CEO. These findings are very important to the question: how can we make economies more productive and what is the relationship between basic science and productivity? The papers are also relevant to anyone interested in the general question of high-performance teams.

These issues are also crucial to the debate about what on earth Britain focuses on now the 2016 referendum has destroyed the Insiders’ preferred national strategy of ‘influencing the EU project’.

For as long as I have watched British politics carefully (sporadically since about 1998) these issues about science, technology and productivity have been almost totally ignored in the Insider debate because the incentives + culture of Westminster program this behaviour: people with power are not incentivised to optimise for ‘improve science research and productivity’. E.g. everything Vote Leave said about funding science research during the referendum (including cooperation with EU programs) was treated as somewhere between eccentric, irrelevant and pointless by Insiders.

This recent Nature paper gives evidence that a) small teams are more disruptive in science research and b) solo researchers/small teams are significantly underfunded.

‘One of the most universal trends in science and technology today is the growth of large teams in all areas, as solitary researchers and small teams diminish in prevalence. Increases in team size have been attributed to the specialization of scientific activities, improvements in communication technology, or the complexity of modern problems that require interdisciplinary solutions. This shift in team size raises the question of whether and how the character of the science and technology produced by large teams differs from that of small teams. Here we analyse more than 65 million papers, patents and software products that span the period 1954–2014, and demonstrate that across this period smaller teams have tended to disrupt science and technology with new ideas and opportunities, whereas larger teams have tended to develop existing ones. Work from larger teams builds on more recent and popular developments, and attention to their work comes immediately. By contrast, contributions by smaller teams search more deeply into the past, are viewed as disruptive to science and technology and succeed further into the future — if at all. Observed differences between small and large teams are magnified for higher impact work, with small teams known for disruptive work and large teams for developing work. Differences in topic and research design account for a small part of the relationship between team size and disruption; most of the effect occurs at the level of the individual, as people move between smaller and larger teams. These results demonstrate that both small and large teams are essential to a flourishing ecology of science and technology, and suggest that, to achieve this, science policies should aim to support a diversity of team sizes…

‘Although much has been demonstrated about the professional and career benefits of team size for team members, there is little evidence that supports the notion that larger teams are optimized for knowledge discovery and technological invention. Experimental and observational research on groups reveals that individuals in large groups … generate fewer ideas, recall less learned information, reject external perspectives more often and tend to neutralize each other’s viewpoints…

‘Small teams disrupt science and technology by exploring and amplifying promising ideas from older and less-popular work. Large teams develop recent successes, by solving acknowledged problems and refining common designs. Some of this difference results from the substance of science and technology that small versus large teams tackle, but the larger part appears to emerge as a consequence of team size itself. Certain types of research require the resources of large teams, but large teams demand an ongoing stream of funding and success to ‘pay the bills’, which makes them more sensitive to the loss of reputation and support that comes from failure. Our findings are consistent with field research on teams in other domains, which demonstrate that small groups with more to gain and less to lose are more likely to undertake new and untested opportunities that have the potential for high growth and failure…

‘In contrast to Nobel Prize papers, which have an average disruption among the top 2% of all contemporary papers, funded papers rank near the bottom 31%. This could result from a conservative review process, proposals designed to anticipate such a process or a planning effect whereby small teams lock themselves into large-team inertia by remaining accountable to a funded proposal. When we compare two major policy incentives for science (funding versus awards), we find that Nobel-prize-winning articles significantly oversample small disruptive teams, whereas those that acknowledge US National Science Foundation funding oversample large developmental teams. Regardless of the dominant driver, these results paint a unified portrait of underfunded solo investigators and small teams who disrupt science and technology by generating new directions on the basis of deeper and wider information search. These results suggest the need for government, industry and non-profit funders of science and technology to investigate the critical role that small teams appear to have in expanding the frontiers of knowledge, even as large teams rapidly develop them.’

Recently Michael Nielsen and Patrick Collison published some research on the question:

‘are we getting a proportional increase in our scientific understanding [for increased investment]? Or are we investing vastly more merely to sustain (or even see a decline in) the rate of scientific progress?’

They explored, inter alia, ‘how scientists think the quality of Nobel Prize–winning discoveries has changed over the decades.’

They conclude:

‘The picture this survey paints is bleak: Over the past century, we’ve vastly increased the time and money invested in science, but in scientists’ own judgement, we’re producing the most important breakthroughs at a near-constant rate. On a per-dollar or per-person basis, this suggests that science is becoming far less efficient.’

It’s also interesting that:

‘In fact, just three [physics] discoveries made since 1990 have been awarded Nobel Prizes. This is too few to get a good quality estimate for the 1990s, and so we didn’t survey those prizes. However, the paucity of prizes since 1990 is itself suggestive. The 1990s and 2000s have the dubious distinction of being the decades over which the Nobel Committee has most strongly preferred to skip, and instead award prizes for earlier work. Given that the 1980s and 1970s themselves don’t look so good, that’s bad news for physics.’

There is a similar story in chemistry.

Why has science got so much more expensive without commensurate gains in understanding?

‘A partial answer to this question is suggested by work done by the economists Benjamin Jones and Bruce Weinberg. They’ve studied how old scientists are when they make their great discoveries. They found that in the early days of the Nobel Prize, future Nobel scientists were 37 years old, on average, when they made their prizewinning discovery. But in recent times that has risen to an average of 47 years, an increase of about a quarter of a scientist’s working career.

‘Perhaps scientists today need to know far more to make important discoveries. As a result, they need to study longer, and so are older, before they can do their most important work. That is, great discoveries are simply getting harder to make. And if they’re harder to make, that suggests there will be fewer of them, or they will require much more effort.

‘In a similar vein, scientific collaborations now often involve far more people than they did a century ago. When Ernest Rutherford discovered the nucleus of the atom in 1911, he published it in a paper with just a single author: himself. By contrast, the two 2012 papers announcing the discovery of the Higgs particle had roughly a thousand authors each. On average, research teams nearly quadrupled in size over the 20th century, and that increase continues today. For many research questions, it requires far more skills, expensive equipment, and a large team to make progress today.’

They suggest that ‘the optimistic view is that science is an endless frontier, and we will continue to discover and even create entirely new fields, with their own fundamental questions’. If science is slowing now, then perhaps it ‘is because science has remained too focused on established fields, where it’s becoming ever harder to make progress. We hope the future will see a more rapid proliferation of new fields, giving rise to major new questions. This is an opportunity for science to accelerate.’ They give the example of the birth of computer science after Gödel’s and Turing’s papers in the 1930s.

They also consider the arguments among economists concerning productivity slowdown. Tyler Cowen and others have argued that the breakthroughs in the 19th and early 20th centuries were more significant than recent discoveries: e.g. the large-scale deployment of powerful general-purpose technologies such as electricity, the internal-combustion engine, radio, telephones, air travel, the assembly line, fertiliser and so on. Productivity growth in the 1950s was ‘roughly six times higher than today. That means we see about as much change over a decade today as we saw in 18 months in the 1950s.’ Yes, the computer and internet have been fantastic but they haven’t, so far, contributed as much as all those powerful technologies like electricity.

They also argue ‘there has been little institutional response’ either among the scientific community or government.

‘Perhaps this lack of response is in part because some scientists see acknowledging diminishing returns as betraying scientists’ collective self-interest. Most scientists strongly favor more research funding. They like to portray science in a positive light, emphasizing benefits and minimizing negatives. While understandable, the evidence is that science has slowed enormously per dollar or hour spent. That evidence demands a large-scale institutional response. It should be a major subject in public policy, and at grant agencies and universities. Better understanding the cause of this phenomenon is important, and identifying ways to reverse it is one of the greatest opportunities to improve our future.’

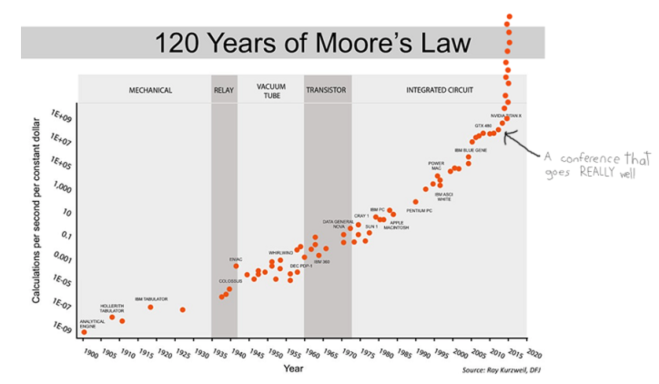

Slate Star Codex also discussed these issues recently. We often look at charts of exponential progress like Moore’s Law but:

‘There are eighteen times more people involved in transistor-related research today than in 1971. So if in 1971 it took 1000 scientists to increase transistor density 35% per year, today it takes 18,000 scientists to do the same task. So apparently the average transistor scientist is eighteen times less productive today than fifty years ago. That should be surprising and scary.’
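The arithmetic behind that claim can be checked in a few lines. This is a minimal Python sketch, not anything taken from the quoted post: the function name `relative_productivity` is hypothetical, and the inputs (1,000 researchers then, 18,000 now, 35% annual density growth in both periods) are the illustrative figures from the quotation above.

```python
def relative_productivity(researchers_then: float, researchers_now: float,
                          growth_then: float, growth_now: float) -> float:
    """Return output per researcher now as a fraction of output per researcher then.

    'Output' here is simply the annual growth rate achieved, divided evenly
    across the headcount. That is a deliberately crude assumption.
    """
    per_head_then = growth_then / researchers_then
    per_head_now = growth_now / researchers_now
    return per_head_now / per_head_then


# Illustrative figures from the quotation: same 35% yearly gain, 18x the people.
ratio = relative_productivity(1000, 18000, 0.35, 0.35)
print(f"Per-researcher productivity now vs then: {ratio:.3f}")
print(f"Equivalent to a {1 / ratio:.0f}-fold drop")
```

With the growth rate held constant, the ratio depends only on headcount, which is why the quoted figure of eighteen falls straight out of the 18,000 to 1,000 comparison.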

Similar arguments seem to apply in many areas.

‘All of these lines of evidence lead me to the same conclusion: constant growth rates in response to exponentially increasing inputs is the null hypothesis. If it wasn’t, we should be expecting 50% year-on-year GDP growth, easily-discovered-immortality, and the like.’

SSC also argues that the explanation for this phenomenon is the ‘low hanging fruit argument’:

‘For example, element 117 was discovered by an international collaboration who got an unstable isotope of berkelium from the single accelerator in Tennessee capable of synthesizing it, shipped it to a nuclear reactor in Russia where it was attached to a titanium film, brought it to a particle accelerator in a different Russian city where it was bombarded with a custom-made exotic isotope of calcium, sent the resulting data to a global team of theorists, and eventually found a signature indicating that element 117 had existed for a few milliseconds. Meanwhile, the first modern element discovery, that of phosphorous in the 1670s, came from a guy looking at his own piss. We should not be surprised that discovering element 117 needed more people than discovering phosphorous…

‘I worry even this isn’t dismissive enough. My real objection is that constant progress in science in response to exponential increases in inputs ought to be our null hypothesis, and that it’s almost inconceivable that it could ever be otherwise.’

How likely is it that this will change radically?

‘At the end of the conference, the moderator asked how many people thought that it was possible for a concerted effort by ourselves and our institutions to “fix” the “problem”… Almost the entire room raised their hands. Everyone there was smarter and more prestigious than I was (also richer, and in many cases way more attractive), but with all due respect I worry they are insane. This is kind of how I imagine their worldview looking:

*

I don’t know what the answers are to the tricky questions explored above. I do know that the existing systems for funding science are bad and we already have great ideas about how to improve our chances of making dramatic breakthroughs, even if we cannot escape the general problem that a lot of low-hanging fruit in traditional subjects like high energy physics is gone.

I have repeated this theme ad nauseam on this blog:

1) We KNOW how effective the very unusual funding for computer science was in the 1960s/1970s — ARPA-PARC created the internet and personal computing — and there are other similar case studies but

2) almost no science is funded in this way and

3) there is practically no debate about this even among scientists, many of whom are wholly ignorant about this. As Alan Kay has observed, there is an amazing contrast between the huge amount of interest in the internet/PC revolution and the near-zero interest in what created the super-productive processes that sparked this revolution.

One of the reasons is the usual problem of bad incentives reinforcing a dysfunctional equilibrium: successful scientists have a lot of power and have a strong personal interest in preserving current funding systems that let them build empires. These empires often involve bad treatment of young postdocs who are abused as cheap labour. This is connected to the point above about the average age of Nobel-winners growing. Much of the 1930s quantum revolution was done by people aged ~20-35 and so was the internet/PC revolution in the 1960s/1970s. The latter was deliberate: Licklider et al deliberately funded not short-term projects but creating whole new departments and institutions for young people. They funded a healthy ecosystem: people not projects was one of the core principles. People in their twenties now have very little power or money in the research ecosystem. Further, they have to operate in an appalling time-wasting-grant-writing bureaucracy that Heisenberg, Dirac et al did not face in the 1920s/30s. The politicians and officials don’t care so there is no force to push sensible experiments with new ideas. Almost all ‘reform’ from the central bureaucracy pushes in the direction of more power for the central bureaucracy, not fixing problems.

For example, for an amount of money that the Department for Education loses every week without ministers/officials even noticing it’s lost — I know from experience this is single figure millions — we could transform the funding of masters and PhDs in maths, physics, chemistry, biology, and computer science. There is so much good that could be done for trivial money that isn’t even wasted in the normal sense of ‘spent on rubbish gimmicks and procurement disasters’, it just disappears into the aether without anybody noticing.

The government spends about 250 billion pounds a year with extreme systematic incompetence. If we ‘just’ applied what we know about high performance project management and procurement we could take savings from this budget and put it into ARPA-PARC style high-risk-high-payoff visions including creating whole new fields. This would create powerful self-reinforcing dynamics that would give Britain real assets of far, far greater value than the chimerical ‘influence’ in Brussels meeting rooms where ‘economic and monetary union’ is the real focus.

A serious government or a serious new party (not TIG obviously which is business as usual with the usual suspects) would focus on these things. Under Major, Blair, Brown, Cameron and May these issues have been neglected for a quarter of a century. The Conservative Party now has almost no intellectual connection to crucial debates about the ecosystem of science, productivity, universities, funding, startups and so on. I know from personal experience that even billionaire entrepreneurs whose donations are vital to the survival of CCHQ cannot get people like Hammond to listen to anything about all this — Hammond’s focus is obeying his orders from Goldman Sachs. Downing Street is much more interested in protecting corporate looting by large banks and companies and protecting rent-seekers than they are in productivity and entrepreneurs. Having an interest in this subject is seen as a sign of eccentricity to say the least while the ambitious people focus on ‘strategy’, speeches, interviews and all the other parts of their useless implicit ‘model for effective action’. The Tories are reduced to slogans about ‘freedom’, ‘deregulation’ and so on which provide no answers to our productivity problem and, ironically, lie between pointless and self-destructive for them politically but, crucially, play in the self-referential world of Parliament, ‘think tanks’, and pundit-world who debate ‘the next leader’ and which provides the real incentives that drive behaviour.

There is no force in British politics that prioritises science and productivity. Hopefully soon someone will say ‘there is such a party’…

Further reading

If interested in practical ideas for changing science funding in the UK, read my paper from last year, which has a lot of links to important papers, or this by two brilliant young neuroscientists who have experienced the funding system’s problems.

For example:

- Remove bureaucracy like the multi-stage procurement processes for buying a lightbulb. ‘Rather than invigilate every single decision, we should do spot checks retrospectively, as is done with tax returns.’

- ‘We should return to funding university departments more directly, allowing more rapid, situation-aware decision-making of the kind present in start-ups, and create a diversity of funding systems.’

- There are many simple steps like guaranteed work visas for spouses that could make the UK a magnet for talented young scientists.

I think looking at the various processes involved more closely will help cast more light on the topic. If the main issue is “can society help more scientific and engineering breakthroughs to happen”, then the arguments and answers should be aimed more directly at this.

ARPA/Parc thrived in part because it considered “other results” (for any reason) as “overhead” for a smaller percentage of breakthrough results. They were shooting for “baseball” i.e. batting about .350 and considered the 65% of “other” acceptable (this worked out extremely well).

In society at large, there needs to be funding for incremental progress as well — much of the myriad of engineering advances have been of this kind (much of “Moore’s Law” progress has been of this kind).

One way to think of the large problem is that “institutional types” much prefer to fund incremental progress because (a) it does help things, and (b) it is more likely to be successful. The big problem is that they have ignored the larger systems principles of both game theory and its relation to investment theory: it really will pay off better over time to invest a small amount of funding in highly improbable investigations (the ARPA/Parc stuff was so improbable that the funders let the researchers define the problems — but this resulted in many tens of trillions of dollars return for the entire world). The risk was only a few hundred millions of dollars over about 15 years.
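The investment arithmetic described here can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a model anyone at ARPA or Parc used: the function names are hypothetical, the 35% hit rate comes from the ".350" batting figure above, and the dollar amounts are round numbers assumed to stand in for "a few hundred millions" and "many tens of trillions".

```python
def break_even_multiple(p_success: float) -> float:
    """Payoff per success, as a multiple of the cost of one project, needed to break even."""
    return 1.0 / p_success


def expected_net_return(n_projects: int, cost_per_project: float,
                        p_success: float, payoff_per_success: float) -> float:
    """Expected payoff minus total cost for a portfolio of independent bets."""
    total_cost = n_projects * cost_per_project
    expected_payoff = n_projects * p_success * payoff_per_success
    return expected_payoff - total_cost


# Hit rate from the text: "batting about .350".
P_SUCCESS = 0.35

# ASSUMED round numbers; the text gives only orders of magnitude.
ASSUMED_TOTAL_COST = 300e6     # "a few hundred millions of dollars"
ASSUMED_TOTAL_RETURN = 30e12   # "many tens of trillions of dollars"

implied_multiple = ASSUMED_TOTAL_RETURN / ASSUMED_TOTAL_COST

# Hypothetical portfolio: 20 projects at $10m each, each success worth $100m.
example_net = expected_net_return(20, 10e6, P_SUCCESS, 100e6)

print(f"Break-even payoff multiple at a 35% hit rate: {break_even_multiple(P_SUCCESS):.2f}x")
print(f"Implied overall return multiple: {implied_multiple:,.0f}x")
print(f"Expected net return of the example portfolio: ${example_net:,.0f}")
```

On these assumptions a funder breaks even when each success repays a little under three times the cost of a single project, while the orders of magnitude quoted above imply a return far beyond that threshold. The hit rate can fall a long way before such a portfolio stops paying.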

One could argue that people who don’t understand systems theory and the mathematics of optimizing various kinds of risks should not be in charge of funding research (or perhaps even the government).

This is a key point because governments are a good size to fund well: the small percentages of high risk funding that needs to be allocated are reasonable funding amounts at governmental scale. What needs to happen is a change in how “success” is perceived by both funders and the public.

The other part that is tricky is that the early stages of a new field are not usually very lucrative for the practitioners (who usually get into the new areas for the fun and love of it). These people are also rather indifferent to most notions of “failure” — they are exploring and learning and creating and building, and are quite happy with this.

When a field gets very successful — such as computing — many things happen, some of which start to clog up the rate of progress. And the practitioners very often start to include less pioneering types who are more interested in security. Some of these will help by making incremental progress, but what was “normal” in the pioneering stages is slowly being redefined downwards.

Another factor — in the US at least — is that “ambiance” — for example real estate in areas where a lot of computing is happening — has ballooned much much faster than inflation. The web says inflation in the US has been about a factor of 6 since Parc was formed, but that the price of a typical house in Palo Alto is about 60 times what it was. This amount of disparity changes the goals of those who seek not just to work but also to live in vibrant areas of computing.

However, though Moore’s Law has allowed computer hardware to be inexpensive enough for everyone to have (in their phone) computing that is many times that of a supercomputer a few years ago, computing itself (all the way down) is really software (it’s important to realize that “hardware is software that is crystallized early”).

Moore’s Law has been primarily improvements in putting transistors on silicon, and every organizational part of computing above this has suffered greatly from lack of high risk research funding in “software” (which includes how the transistors are organized to how operating systems and many different kinds of software are organized).

The good news is that there is still Vannevar Bush’s “Endless Frontier” for computing. The bad news is that almost no one is exploring it, and there is almost no funding for exploring it.

But what about “AI” you might ask? And I would say: “Yes, the immense limitation of what’s needed has resulted in great improvements in classification, but the large idea of moving from ‘classification to cognition’ has languished.” (Given the instrumental and almost blind nature of societies today, this could be a good thing.)

In the larger sense, the ability to create safe organizations of software has not scaled even close to the Moore’s Law scaling of transistors. We should expect to see a number of tragic failures from this over the next few years, as software becomes more intertwined with human safety and life. If I were a funder I would start finding and funding geniuses to make breakthroughs to a saner and more helpful future for computing.

Thanks Alan.

On your last point re safety, I vaguely remember seeing something by you years ago on the question — why hasn’t software security been taken more seriously, why is it not incentivised, how would you build computing architectures that really are secure etc… Have I dreamed this — if not I would be very grateful for links…

Best wishes

Dominic

PS. If you haven’t read it, you may find this paper by Omohundro interesting re AI/safety — Autonomous technology and the greater human good, 2014.

It has an interesting idea about the use of mathematical proofs as safety features. Professor Borovik who also commented on this blog might also find this idea interesting…

Hi Dom

A “not really cynical” way to look at standard engineering is that people — and often whole societies — get upset when a bridge or building collapses: people get hurt or worse, and the structures were not supposed to fail. This has tweaked up much of how engineering goes about its business. The loop is aided by forces of nature — air/wind, earthquakes, gravity, etc. — that are strong enough to affect structures — and large ones especially (because of non-linear scalings).

By contrast, the equivalents of “forces” in computers are quite weak these days, and most software failures in the past have been crashes that don’t generally kill people. And the Moore’s Law scaling has removed some of the need for careful design that used to obtain in the past. Add to this the relative newness and obscureness of software (and computers in general), and you have processes whose top priorities are far from safety at pretty much every level, and whose tools and methods are intrinsically quite weak. Software engineers are struggling, and safety is rather far down on the list of things they are struggling with.

The idea and use of mathematical proofs to ensure some (small) parts of a system are safe has been in use for more than 50 years. But the larger problems remain, and are not amenable to proofs (any more than proofs help much in understanding Biology in order to improve medicine).

One way to think of it is that the combination of enormous scaling and vast ranges of “degrees of freedom” (both also as in Biology) means that most systems maintain stability through dynamic means (i.e. a good large complex system — like our bodies or the Internet — has “more things going right than going wrong”, and a lot of the “things going right” are there to deal with the things that might go wrong).

Physical structures are also “in dynamic process” but their stabilities are not very complex. But in Biology and computing, the dynamics are such to require a very different kind of system organization to achieve enough relative stability to be viable. This is generally true of all complex systems as they get larger and more intertwined.

Isaac Asimov in his robot stories from the 40s and 50s came up with the idea that intelligent robots would need “dynamic oversight heuristics” that he called “The Three Laws of Robotics” (basically these put the safety of humans first, and then the safety of the robot). But Asimov cleverly also thought up loopholes in the laws (or apparent loopholes) and used these to generate a series of entertaining and thoughtful stories.

With regard to the Indonesian crash of the Boeing 737, they do not know why it happened. The transcripts/recordings of the black boxes **perhaps** indicated that the plane’s SW thought the plane was in a stall and tried to dive to get out of the stall (this is not good if the plane is very near the ground). In any case, there was apparently no simple override switch to allow the pilots to get back complete control. Widening out, it is highly likely that there was no software on the plane that could play the role of Asimov’s Three Laws, or to have a larger sense of what was going on (for example, there is no reason I can think of why diving a plane at the ground when near the ground is going to help anything).

Widening out further, it’s worth contemplating that at this moment in time it is not “actually smart AI” that is likely the threat, but poorly designed systems that are now reaching into many areas where humans demand safety. As Pogo said, “We have met the enemy and he is us”.

My experience as a research mathematician, and as someone who for several years served on the Programme Committee (the one which awards research grants) of the London Mathematical Society, a professional organisation of British mathematicians and a charity which distributes grants in support of mathematics research, strongly suggests that “microfunding” provides much better value for money than big grants of the kind favoured by state funding bodies.

Small, invitation-only workshops of up to about a dozen participants are much more efficient than overcrowded global conferences. The latter developed and were necessary in the 1960s and 70s, when increasingly cheap air travel made them an accessible and indispensable tool for getting a general picture of what is going on in a particular research area. Now, the Internet provides a much cheaper option. However, small workshops retain their value. There, people talk to each other in an informal professional jargon which, together with accompanying gestures, facial expressions, etc., is immediately grasped by the subconscious modules of the participants’ minds (the ones that actually do mathematics), and which is very different from the formal language of papers published in academic journals. These workshops allow a brain to speak directly to another brain.

I emphasise that this does not necessarily apply to laboratory-based disciplines — but, in the case of mathematics, the governmental funding is in a sorry state: a waste of money with minimal support for the actual research. Support from charities (such as the Leverhulme Trust) is much smaller in scale, but, per pound, much more efficient.

Disclaimer: Views expressed do not necessarily represent the position of my employer, or any other person, organisation, or institution.

Thanks Sasha, very interesting…

With respect to the decline in scientific productivity, maybe it’s just that all the easy problems have been solved, and we are left with the extremely difficult to impossible ones. Kuhn argued that new problems are always embedded in a framework created by the most recent scientific revolution in the field (e.g. quantum mechanics, relativity, standard model …). He compared it to a crossword puzzle, where the vast bulk of scientists work at just filling in the words, in a puzzle created by the revolutionaries (Einstein, Maxwell, Gibbs, Schrodinger etc.) It might be that we are down to the last couple of clues. Hopefully, someone will come up with a new revolution. Maybe CRISPR/Cas9 will give us that.

Hi Steve

This is a popular trope, but I don’t think that this is the main problem (and certainly not in my “almost field” of “computer science”). The problems are much more in how funding is done and understanding by the funders what it costs to achieve breakthroughs (i.e. not a lot of money at all, but the percentage of it that will be successful is small — they hate the latter and can’t do the arithmetic on the former to see that if the whole costs are low then breakthroughs are still quite cheap).

Their problems come from feeling that something is wrong if only (say) 35% of the results are breakthroughs. They think this is “not efficient”, but I think this is a hangover from school where students are given easy problems and are expected to solve most of them. The reality in *edge of the art* research is that the problems are not easy, but the ones that get solved then provide enormous leverage to civilization.

“maybe it’s just that all the easy problems have been solved”

I doubt that is all that is going on, but it does seem to be part of what’s going on. It is easy for a middle schooler with an experimental budget of a few dozen dollars to do experiments which are utterly inexplicable for 1900 scientific understanding, and largely within reach of 1940 scientific understanding. (Consider e.g. putting sodium in a hot flame, passing the glow through a slit and a prism, and observing the bright spectral lines, as I did in a lab course in middle school. Admittedly it is not easy to find a middle schooler who can do the relevant calculations — often fairly involved quantum mechanical calculations — to make any kind of detailed comparison between the 1940 scientific understanding and the observations.) The progress in fundamental physics that we have made since 1940 or so seems to be rather more expensive to reach experimentally: even with the benefit of hindsight, a lot of it seems to be locked behind fancy expensive prerequisites like particle accelerators. The main exception I can think of is our understanding of phase changes: it’s easy to do a cheap experiment showing that water has a sharp boiling point or that magnetism changes with heating, and we understand the ins and outs of that much better than we did in 1940. So it looks to me as though there’s a very real tendency for modern science to be concentrated in areas that are less accessible than a lot of the older scientific progress: not a big enough tendency to easily explain the huge decrease in grant cost effectiveness, but not a small tendency either.

One aspect that I think is important is that establishment politicians only see science funding as a budget line item. It’s also a budget line item that’s hard to link to favorable voter turnout because the time cycle between investment and a new breakthrough can easily be decades. It’s hard to say, “as an MP, I ‘fought’ for funding that may be a small component of the solution, 10 years from now, for battery packs that can supply cars for 3000 miles between recharging.” The current status quo works really well (except for research efficiency).

Seriously Dominic – I think I can speak for 99%+ of scientists in the UK (as a physicist / engineer myself who works in the field – I know them pretty well) to ask … is this what it comes to?

You have played a very large role in bringing the UK to its knees – which has played its own part in wrecking the reliability and integrity of its once respected scientific institutions – on a world scale. And yet all you can do is focus on your ARPA process hypotheses – again! There is a galactic size elephant in the room you forgot to mention.

Top science tip for you : If ever you were to visit one of our research accelerators please don’t linger too long. Once identified you are likely to quickly find yourself bundled inside one of the diagnostic ports or target stations and subjected to a very high – and very lethal – dose of radiation.

Don’t be too harsh on scientists’ productivity. Even Moore’s law isn’t as great on costs as you might hope. Are you aware of Moore’s second law, also known for a long time?

https://en.wikipedia.org/wiki/Moore%27s_second_law

The cost of semiconductor fabs doubles every few years. It’s not exactly the same as semiconductor R&D costs doubling, but it’s still a form of capital costs for the improvement.

You may be interested in Terence Kealey’s book ‘The Economic Laws of Scientific Research’ – he also questions the value of a lot of public science funding. Also, ‘Faster, Better, Cheaper’ by Howard E McCurdy, which charts NASA’s transition from big to smaller deep space probe projects.
